INTRODUCTION

National concerns regarding an improved awareness of patient safety and quality of care within the US health care system have driven expectations for a minimal competence level in quality improvement/patient safety (QIPS) from contemporary physicians. It has been increasingly recognized that physicians should be engaged in QIPS activities to make the systems through which they provide patient care perform more safely and reliably.1,2

In order to ensure that our future physicians embed this aspect of practice into their work, there also is a growing mandate for the effective integration of QIPS training into graduate medical education (GME).3 A broader term used within Next Accreditation System documents has been scholarly activity, referring to both healthcare systems-oriented QIPS projects and formal controlled research studies.4,5

The overall rationale for such integration of QIPS content into GME curricula is a belief that when residents develop and apply project design/conduction skills, they will continue to participate in such activities once they enter post-residency practice.3,5 In this respect, the Accreditation Council for Graduate Medical Education (ACGME) 2013 Common Program Requirements establish that ‘‘residents must demonstrate the ability to investigate and evaluate their care of patients, to appraise and assimilate scientific evidence, and to continuously improve patient care based on constant self-evaluation and life-long learning.’’ 6(p11)

It is now also considered mandatory by the ACGME that residents learn to ''systematically analyze practice using QI methods and implement changes with the goal of practice improvement."6 An ongoing challenge for GME leaders and faculty, however, has been the lack of specific curricular frameworks to help residents plan out QIPS projects in diverse community-based residency program settings.7–9

The importance of establishing coordinated scholarly activity (SA) project expectations and timelines for residency programs has been suggested by a growing number of GME experts.3,10 During recent years it also has been proposed that coordinated efforts by GME officials from multiple residency programs and systems may enable them to more efficiently share resources, resulting in more QIPS projects and products.3,11,12

In terms of preparing residents for QIPS project experiences, some authors have reported achieving increased momentum from the establishment of cross-program project teams with shared project interests.13–17 Other GME groups have specifically implemented residency program timelines during which earlier-year residents develop specialty-related project conduction skills to lead their own later-year projects.3,13,18–20

Similar to what the authors have seen in several affiliated healthcare systems, councils or committees have been established in some settings to coordinate resident or faculty-resident project planning.17,21,22 Some GME publications have described rotations for residents to work individually with healthcare system QIPS department personnel to generate their own subsequent QIPS project ideas.23–26

Since they lacked an institution-wide QIPS curriculum, GME faculty (CM, JU) at Detroit Wayne County Health Authority (Authority Health) in Detroit, MI concluded that it was first necessary to determine their residents’ baseline QIPS project-related confidence levels. The development of this study started as an 18-month Teach for Quality GME project coordinated by the third author (BC). The Association of American Medical College’s Teach for Quality program was designed in 2013 to equip clinical faculty to lead, design, and evaluate effective QIPS projects.27 The authors (BC, WC) from the Statewide Campus System 28 (SCS) of the Michigan State University College of Osteopathic Medicine adapted the training program to support a cohort of GME faculty learners through a consortium model of QIPS coaching supports and materials. The overall results of this QIPS-oriented curricular series of 13 developed projects have been reported in another paper available from the authors.22

PROJECT PURPOSE

This exploratory pilot study was conducted to identify how residents’ personal and residency program characteristics, demographics, and previous QIPS project experience might influence residents’ perceived confidence levels to develop and conduct prospective QIPS projects. The authors’ intent is that the results from this project inform future work and integration of QIPS content and mentoring into residents’ training at Authority Health and at other individual residency programs. The overall null hypothesis of the study was that there would be no statistically significant differences between subgroup QIPS confidence level responses based on any residents’ personal or residency program characteristics.

METHOD

After obtaining IRB approval, a total non-probability convenience sample of 55 resident physicians from five residency programs (Family Medicine, Internal Medicine, Obstetrics and Gynecology, Pediatrics, and Psychiatry) at Authority Health were surveyed by the first two authors (CM, JU) from 09/28/2015 to 01/06/2016 using online Survey Monkey software.29 This software allowed for anonymous survey submissions and easy extraction of raw response data. Prospective respondents were first emailed a survey link through their residency program emails, and later reminded to consider participating in the study through additional emails and by the first two authors (CM and JU) at agency/program staff meetings.

The complete 38-item project survey included a QIPS project confidence portion derived from the 31-item Quality Improvement Confidence Instrument (QICI) developed and psychometrically validated by Hess et al. in 2013.30,31 After respondents were asked seven questions about their personal and residency program characteristics, they were presented with 31 questions about their perceived confidence in their ability to develop and conduct QIPS projects. Each of the 31 items used a five-point scale ranging from Not At All Confident to Very Confident. The six subscales of the QICI included: 1. Describing the Issue, 2. Building a Team, 3. Defining the Problem, 4. Choosing a Target, 5. Testing the Change, and 6. Extending Improvement Efforts.30,31 Respondents also were asked for suggestions of QIPS topics they might be interested in learning more about.

Following completion of data collection, data cleaning was undertaken using SPSS version 22 analytic software32 to transform nominal response data into numeric form and collapse some diverse variables into categories. A series of descriptive statistics were generated with cross-tabulations, and correlations were calculated among five key selected respondent characteristics the authors hypothesized might influence respondents’ overall QIPS project confidence levels (age, gender, residency year, type of program, previous QIPS experience). Participants also were asked whether they had obtained any prior experience in QIPS-type activities.

Cleaned study data were analyzed using a series of forward stepwise multinomial logistic regression modeling (MLM) procedures.33 These procedures were used to determine if any statistically significant associations existed between five resident characteristics with adequate frequencies and their respective QIPS project confidence levels. These types of analytic procedures are especially suited for smaller samples in which prospective cell frequencies (e.g., Male Pediatrics or Female Psychiatry resident respondents) are likely to be lower and not normally distributed.33

RESULTS

Descriptive Statistics: Of the 55 respondents who started the online survey, a total of 43 (78% of total respondents) completed 95% or more of the entire survey. Twelve (22%) respondents apparently either failed to note that the survey was comprised of additional survey screens or decided they were uninterested in completing the survey. The final analytic sample used for most analyses therefore was comprised of the 43 respondents who had completed most items on the entire survey.

The authors began by comparing the reported personal characteristics of the 12 respondents who failed to complete the QICI section of the survey to the 43 respondents who completed the entire survey tool. Using these fields completed by the totality of the respondent sample, a series of cross-tabulation tests comparing the two sample subgroups failed to show any statistically significant differences between partial-survey and full-survey respondents.

In the final analytic study sample (n = 43), 26 (60%) respondents were female, with respondents’ reported age category split fairly evenly between the 20 to 30 years of age and the 31 years and older categories. Respondents also were approximately uniformly divided across First-year through Third or Fourth-year residency program year categories.

In order to divide the sample into comparison groups based on type of training program, the authors created a Primary Care category (Family Medicine and Pediatrics) that comprised 54% of the analytic sample. The Non-Primary Care category was comprised of Internal Medicine, Obstetrics/Gynecology and Psychiatry respondents. A total of 18 respondents (42%) indicated that they had participated in some sort of prior QIPS project experience (see Table 1).

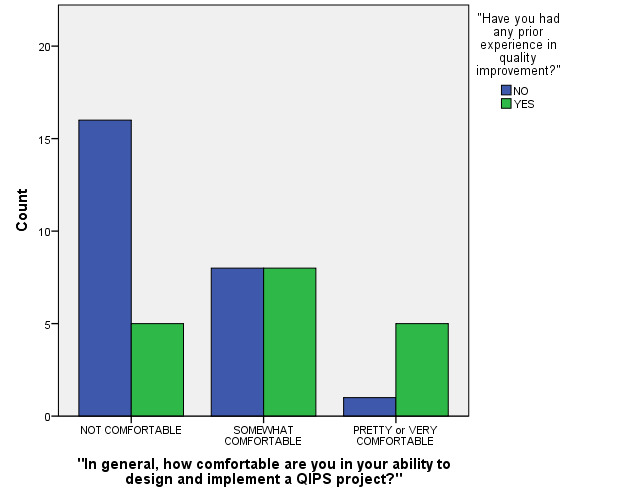

Respondents’ Overall QIPS Confidence Levels: Before respondents answered the 31 question QICI survey, they were asked a summary item concerning their overall level of confidence in designing and implementing a QIPS project. Responses on the five-point scale were grouped into three levels: a) Not Comfortable 21 respondents (49%), b) Somewhat Comfortable 16 (37%) and c) Pretty Comfortable or Very Comfortable" 6 (14%).

An initial forward stepwise MLM was conducted comparing the major resident characteristics to prospective QIPS project comfort levels. A two-tailed p-value=0.05 was selected to designate statistical significance. Each model term was entered individually in a stepwise manner to gauge their significance for the three overall comfort level response categories. Those variables initially found to exert non-significant degrees of significance (p-value of greater than 0.100) were removed from the later final MLM model.

Two MLM model terms that were not significant in the initial model were Age Category (confined to two categories since all respondents were either in their twenties or thirties) and Year of Residency Program (three categories). In the final regression model, however, three factors were each found to be statistically significant predictors of respondents’ reported confidence level categories (see Figures 1-2 and Table 2 for final model test statistics) These were: a) Gender (p=0.045), b) being in a Primary Care residency program (p=0.038) and c) having had prior reported QIPS project experience (p=0.049). In summary, males, those residents who were in a Primary Care program, and/or those who reported having had prior QIPS project experiences reported significantly higher levels of QIPS project confidence.

The final model fitting test statistic generated by the analytic software was 0.05, indicating that the set of selected model terms used performed significantly better than an intercept-only model. The McFadden Pseudo R-Square test statistic generated by the software, however, was a fairly modest 0.231. This indicated the three surviving model terms (listed in prior paragraph) explained a relatively small proportion of sample respondents’ confidence levels.33 In other words, other unmeasured resident factors likely may have influenced how this sample of residents responded to this composite confidence item.

As depicted in Table 3, the distribution of the composite QICI and six subscale scores was quite considerable, generally ranging four to fivefold. Using a series of different stepwise linear regression models, however, none of the three resident characteristics that survived in the final MRM models came up as statistically significant influences for either composite or subscale confidence scores. This finding may be conservatively interpreted as an indication that respondents’ confidence scores may have been affected by interactive (i.e., combined factor), and/or non-linear unmeasured relationships within this sample.

Eight residents responded to the open-ended “topics of interest” survey item, citing categorized areas such as: a) basic training regarding QIPS project conduction (n = 5), b) patient wait time (n =1), or c) delivering more cost effective healthcare (n = 2).

LIMITATIONS

These study results have two major limitations that the authors would like to acknowledge: a) the smaller single-setting convenience sample and b) potential methodological problems of breaking residency programs into Primary Care and Non-Primary Care categories. If the study sample had been larger and derived from multiple settings, other sample subgroup differences may have been observed. It also should be acknowledged that the authors’ decision to categorize certain respondents into a Primary Care category and others into a separate non-Primary Care category was somewhat forced by the distribution of residents across Authority Health’s training programs. Obviously, none of these survey items measured respondents’ actual competency to design or conduct QIPS projects.

CONCLUSIONS

The authors could confidently reject their null hypothesis since the results demonstrated such variation in respondents’ QIPS project confidence levels. Somewhat similar to earlier studies, significantly higher QIPS project confidence levels were obtained from males,30 primary care residents,34 and those with some form of prior QIPS project experience.35 Due to the wide variety of medical skills that many primary care physicians may need to practice, it was not unexpected that primary care respondents would report higher baseline QIPS confidence participating in a new process than their counterparts.

These results suggest the need across residency programs for systematic QIPS education to enable physician trainees to gain confidence to incorporate QIPS project principles into their medical practices. Although the authors’ level of statistical power to detect interactive or smaller subgroup differences was certainly limited in this study, the complex variations found in even this smaller sample may have implications for GME faculty. These results could suggest what QIPS content topics may be more important for suitable residency program curricula. Larger scale follow-up/longitudinal studies are certainly required to generate more generalizable results for GME settings across the country. Ideally, future studies will enable GME leaders to develop and deliver effective QIPS curricula based on resident physicians’ characteristics and preferences.

Funding

The authors report no external funding source for this study.

Conflict of Interest

The authors declare no conflict of interest.